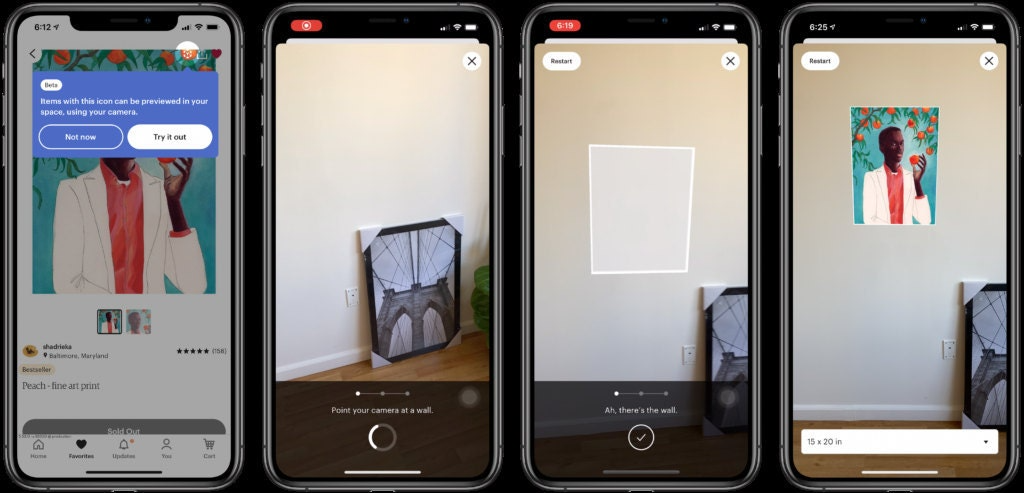

I was the tech lead on a team at Etsy that built features to preview objects (e.g., wall art, rugs, tables) in a user’s room.

For wall art, we display a preview of the object on the user’s wall. For three-dimensional objects, we display a translucent cube that shows the object’s size. We built the project in multiple stages and released each stage as an A/B test. We also tested several prototypes with users along the way.

My role on this project was to lead the backend implementation. One of our challenges was that very few listings had structured size information. Instead of well-defined size data, most listings on Etsy only had free-form text describing the item. We developed an algorithm to infer dimensions from listing data (titles, descriptions, and product offerings). After experimenting with an ML model and several off-the-shelf tools, we found that a long regular expression that parsed size data from listings worked well. With this approach, we achieved high accuracy (measured against human-annotated data) and covered about 70% of listings in the wall art and furniture categories.
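The post doesn't include the team's actual expression, so the following is only a minimal Python sketch of the general idea, with a hypothetical pattern far shorter than the real one. It looks for a "W x H" or "W x H x D" run in listing text, converts it to centimeters, and scores coverage and accuracy against human-labeled examples. The names `infer_dimensions` and `evaluate`, the rule that unitless sizes are inches, and the 1 cm matching tolerance are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

# Hypothetical, deliberately small pattern (NOT Etsy's real regex):
# two or three numbers joined by "x", "×", or "by", plus an optional unit.
DIMENSION_RE = re.compile(
    r"(?<![\d.])(?P<a>\d+(?:\.\d+)?)\s*(?:x|×|by)\s*(?P<b>\d+(?:\.\d+)?)"
    r"(?:\s*(?:x|×|by)\s*(?P<c>\d+(?:\.\d+)?))?"
    r'(?:\s*(?P<unit>inches|inch|in|cm|ft|")(?![a-z]))?',
    re.IGNORECASE,
)

_CM_PER_UNIT = {"inches": 2.54, "inch": 2.54, "in": 2.54, '"': 2.54,
                "cm": 1.0, "ft": 30.48}


@dataclass(frozen=True)
class Dimensions:
    """Inferred size in centimeters; depth is None for flat items like prints."""
    width_cm: float
    height_cm: float
    depth_cm: Optional[float] = None


def infer_dimensions(text: str, default_unit: str = "in") -> Optional[Dimensions]:
    """Return the first size found in `text`, or None if the listing isn't covered."""
    match = DIMENSION_RE.search(text)
    if match is None:
        return None
    # Assumption: a size written without a unit is in inches.
    factor = _CM_PER_UNIT[(match.group("unit") or default_unit).lower()]
    a, b, c = match.group("a"), match.group("b"), match.group("c")
    return Dimensions(
        width_cm=round(float(a) * factor, 2),
        height_cm=round(float(b) * factor, 2),
        depth_cm=round(float(c) * factor, 2) if c else None,
    )


def _close(pred: Dimensions, label: Dimensions, tol_cm: float) -> bool:
    """True if every dimension agrees with the label within `tol_cm`."""
    if (pred.depth_cm is None) != (label.depth_cm is None):
        return False
    pairs = [(pred.width_cm, label.width_cm), (pred.height_cm, label.height_cm)]
    if pred.depth_cm is not None:
        pairs.append((pred.depth_cm, label.depth_cm))
    return all(abs(p - y) <= tol_cm for p, y in pairs)


def evaluate(texts: Sequence[str], labels: Sequence[Optional[Dimensions]],
             tol_cm: float = 1.0) -> Tuple[float, float]:
    """Coverage: share of listings with any prediction.
    Accuracy: share of covered listings matching the human label."""
    predictions = [infer_dimensions(t) for t in texts]
    covered = [(p, y) for p, y in zip(predictions, labels) if p is not None]
    coverage = len(covered) / len(texts) if texts else 0.0
    correct = sum(1 for p, y in covered if y is not None and _close(p, y, tol_cm))
    accuracy = correct / len(covered) if covered else 0.0
    return coverage, accuracy


if __name__ == "__main__":
    print(infer_dimensions("Abstract print, 12 x 16 inches, unframed"))
    print(infer_dimensions("Oak side table, 45 x 45 x 60 cm"))
    print(infer_dimensions("Handmade ceramic mug"))  # None: not covered
```

Separating coverage from accuracy mirrors the trade-off described above: a stricter pattern tends to raise accuracy on the listings it matches while leaving more listings uncovered.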

We also faced complex challenges on the client side, which you can read more about in our blog posts.

This project was highly visible internally and externally. We frequently updated executives on our progress, and other parts of the organization (e.g., marketing and seller support) coordinated with us to announce the feature to users.

Our press release was picked up by multiple news sites, including TechCrunch and The Verge.

Thanks to Han Cho, Mahreen Ijaz, Kate Matsumoto, Siri Mcclean, Pedro Michel, Jacob Van Order, and Evan Wolf who worked with me on this project.